Whenever you think of mobile computing hardware, Arm is likely the first company that comes to mind, or it should be. While Intel has historically been recognized as the leader in chip making, Arm spent years slowly carving out a niche that eventually reached an inflection point, where raw speed mattered less than efficiency and portability.

This is why Arm dominates the mobile processor market, with almost every major mobile chip built on its architecture. We’re talking billions of chips used in phones, smart TVs, embedded applications, biometric systems, laptops, and tablets. But why is this the case, and why have other architectures like x86 failed to take hold in mobile devices? In this article, we’ll give you a technical overview of what Arm is, where it came from, and why it has become so ubiquitous.

The first thing to note is that Arm doesn’t actually make processors. Instead, they design CPU architectures and license those designs to companies like Qualcomm, Apple, and Samsung, which incorporate them into their processors. Since they’re all using a common standard, code compiled for the Arm architecture that runs on a Qualcomm Snapdragon processor will generally also run on a Samsung Exynos processor.

What’s an ISA (Instruction Set Architecture)?

Every processor needs an ISA to function, and an ISA is what Arm represents. For a detailed look at how CPUs operate internally, our CPU design series is a must-read.

The first step to explaining Arm is to understand what exactly an ISA is and isn’t.

It’s not a physical component like a cache or a core; rather, an ISA defines how a processor behaves from the software’s point of view. This includes what types of instructions the chip can process, how the input and output data should be formatted, how the processor addresses and accesses memory, and more. Another way to put it: an ISA is a set of specifications, while a CPU is a realization or implementation of those specifications. It’s a blueprint that every compatible CPU must follow, even though the internal details can differ from one chip to the next.

ISAs specify the size of each piece of data the processor works with, such as the width of its registers and memory addresses, with most modern processors using a 64-bit model. All processors perform the same three basic functions: reading instructions, executing those instructions, and updating their state based on the results. How finely those steps are broken down, however, depends on the specific chip design.

Processors implementing a complex ISA like x86 will typically divide this process into many smaller pipeline stages to improve throughput. Techniques like branch prediction for conditional instructions and prefetching future pieces of data are generally left to the chip designer rather than dictated by the ISA.

Above all, an ISA specifies the set of instructions a processor can execute. Instructions are the basic operations a CPU carries out, and they are usually generated by a compiler. There are many types of instructions, such as memory reads and writes, arithmetic operations, branches/jumps, and more. An example might be “add the contents of memory address 1 to the contents of memory address 2 and store the result in memory address 3.”

The length of an instruction depends on the ISA: in Arm’s 64-bit instruction set every instruction is exactly 32 bits long, while x86 instructions vary from 1 to 15 bytes. Each instruction has several fields. The most important is the opcode, which tells the processor what specific type of instruction it is. Once the processor knows what type of instruction it is executing next, it will fetch the relevant data needed for that operation. The location and type of that data are given in the instruction’s other fields. Here are some links to portions of the Arm and x86 opcode lists.
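To make the idea of instruction fields concrete, here is a small Python sketch that pulls the fields out of a single 32-bit A64 instruction word. It assumes the field layout of Arm’s “ADD (shifted register)” encoding and handles only that one instruction; the helper names `bits` and `decode_add_shifted` are our own, not part of any Arm tool.

```python
def bits(word: int, hi: int, lo: int) -> int:
    """Return bits hi..lo (inclusive) of a 32-bit instruction word."""
    return (word >> lo) & ((1 << (hi - lo + 1)) - 1)


def decode_add_shifted(word: int) -> str:
    """Decode an A64 'ADD (shifted register)' instruction (sketch only).

    Assumed field layout, from bit 31 down to bit 0:
      sf | op | S | 01011 | shift | 0 | Rm | imm6 | Rn | Rd
    """
    # Bits 30..24 act as the opcode here: op=0, S=0, then the fixed pattern 01011.
    if bits(word, 30, 24) != 0b0001011 or bits(word, 21, 21) != 0:
        raise ValueError(f"{word:#010x} is not ADD (shifted register)")
    sf = bits(word, 31, 31)     # 1 = 64-bit X registers, 0 = 32-bit W registers
    shift = bits(word, 23, 22)  # 0 = LSL, 1 = LSR, 2 = ASR (3 is reserved)
    rm = bits(word, 20, 16)     # second source register
    imm6 = bits(word, 15, 10)   # shift amount applied to Rm
    rn = bits(word, 9, 5)       # first source register
    rd = bits(word, 4, 0)       # destination register
    # Simplification: register 31 really means the zero register (XZR/WZR)
    # in this encoding, but the sketch just prints it as X31/W31.
    reg = "X" if sf else "W"
    text = f"ADD {reg}{rd}, {reg}{rn}, {reg}{rm}"
    if imm6:
        text += f", {['LSL', 'LSR', 'ASR', '???'][shift]} #{imm6}"
    return text


print(decode_add_shifted(0x8B020020))  # ADD X0, X1, X2
```

Because every A64 instruction has the same 32-bit width, the decoder can find each field with a fixed shift and mask; a variable-length x86 decoder cannot assume that.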

RISC vs. CISC

Now that we have a basic idea of what an ISA is and does, let’s start looking at what makes Arm special.

The most significant aspect is that Arm is a RISC (Reduced Instruction Set Computing) architecture while x86 is a CISC (Complex Instruction Set Computing) architecture. These are two major paradigms of processor design and both have their strengths and weaknesses.

With a RISC architecture, each instruction directly specifies a relatively basic action for the CPU to perform. Instructions in a CISC architecture, on the other hand, are more complex and specify a broader operation for the CPU.

This means a CISC CPU will typically break each instruction down further into a series of micro-operations. Because a CISC architecture can encode much more detail into a single instruction, a program may need fewer instructions, which can improve performance.

For example, a RISC architecture might have just one or two “Add” instructions, while a CISC architecture may have 20, depending on the type of data and other parameters for the calculation. A more detailed comparison between RISC and CISC can be found here.
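To illustrate the difference, the sketch below runs the memory example from earlier (“add the contents of memory address 1 to the contents of memory address 2 and store the result in memory address 3”) on two made-up toy machines. Neither instruction set is real Arm or x86 code: the CISC-style machine gets one memory-to-memory instruction, `ADDM`, while the RISC-style machine has to load values into registers, add them, and store the result.

```python
# Two hypothetical toy instruction sets, written for illustration only.

def run_cisc(program, memory):
    """CISC-style: a single instruction may read and write memory directly."""
    for op, *args in program:
        if op == "ADDM":  # ADDM dst, src1, src2: mem[dst] = mem[src1] + mem[src2]
            dst, src1, src2 = args
            memory[dst] = memory[src1] + memory[src2]
        else:
            raise ValueError(f"unknown CISC opcode {op}")
    return memory


def run_risc(program, memory):
    """RISC-style: only LOAD/STORE touch memory; ADD works on registers."""
    regs = [0] * 8
    for op, *args in program:
        if op == "LOAD":     # LOAD rd, addr: regs[rd] = mem[addr]
            rd, addr = args
            regs[rd] = memory[addr]
        elif op == "ADD":    # ADD rd, rn, rm: regs[rd] = regs[rn] + regs[rm]
            rd, rn, rm = args
            regs[rd] = regs[rn] + regs[rm]
        elif op == "STORE":  # STORE rs, addr: mem[addr] = regs[rs]
            rs, addr = args
            memory[addr] = regs[rs]
        else:
            raise ValueError(f"unknown RISC opcode {op}")
    return memory


cisc_program = [("ADDM", 3, 1, 2)]               # 1 complex instruction
risc_program = [("LOAD", 0, 1), ("LOAD", 1, 2),  # 4 simple instructions
                ("ADD", 2, 0, 1), ("STORE", 2, 3)]

print(run_cisc(cisc_program, {1: 5, 2: 7, 3: 0}))  # {1: 5, 2: 7, 3: 12}
print(run_risc(risc_program, {1: 5, 2: 7, 3: 0}))  # {1: 5, 2: 7, 3: 12}
```

Both programs produce the same result. The CISC version needs only one instruction but a more elaborate decoder, while the RISC version needs four instructions that are each trivial to decode.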

| CISC | RISC |
|---|---|
| Push complexity to hardware | Push complexity to software |
| Many different types and formats for instructions | Instructions follow a similar format |
| Few internal registers | Many internal registers |
| Complex decoding to break up instruction parts | Complex compiler to write code with granular instructions |
| Complex forms of memory interaction | Few forms of memory interaction |
| Instructions take different numbers of cycles to finish | Most instructions are designed to finish in one cycle |
| Difficult to divide and parallelize work | Easy to parallelize work |

Another way to look at it is by comparing it to building a house. With a RISC system, you only have a basic hammer and saw, while with a CISC system, you have dozens of different types of hammers, saws, drills, and more. A builder using a CISC-like system would be able to get more work done since their tools are more specialized and powerful. The RISC builder would still be able to get the job done, but it would take longer since their tools are much more basic and less powerful.

You’re now likely thinking “why would anyone ever use a RISC system if a CISC system is so much more powerful?” Performance is far from the only thing to consider though. Our CISC builder has to hire a bunch of extra workers because each tool requires a specialized skill set. This means the job site is much more complex and requires a great deal of planning and organization. It’s also much more costly to manage all these tools since each one may work with a different type of material. Our RISC friend doesn’t have to worry about that since their basic tools can work with anything.

The home designer has a choice as to how they want their home built. They can create simple plans for our RISC builder or they can create more complex plans for our CISC builder. The starting idea and finished product will be the same, but the work in the middle will be different.

In this example, the home designer is equivalent to a compiler. It takes as input code (the home drawing) produced by a programmer (the homeowner), and outputs a set of instructions (building plans) depending on which style is preferred. This allows a programmer to compile the same program for an Arm CPU and an x86 CPU, even though the resulting lists of instructions will be very different.
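As a rough sketch of that idea, the hypothetical Python function below takes one source statement, `c = a + b`, with each variable stored at a fixed memory address, and emits a different instruction list for a RISC-style target and a CISC-style target. The mnemonics (`LOAD`, `ADD`, `STORE`, `ADDM`) and the address map are invented for illustration and do not correspond to real Arm or x86 assembly.

```python
# Hypothetical address map: where each variable lives in memory.
ADDRESSES = {"a": 1, "b": 2, "c": 3}


def compile_add(statement: str, target: str) -> list[tuple]:
    """Compile a statement of the form 'dst = x + y' for a toy target."""
    dst, expr = (part.strip() for part in statement.split("="))
    x, y = (name.strip() for name in expr.split("+"))
    d, a, b = ADDRESSES[dst], ADDRESSES[x], ADDRESSES[y]
    if target == "cisc":
        # One instruction that reads both operands from memory and writes the result.
        return [("ADDM", d, a, b)]
    if target == "risc":
        # Load-store style: move data into registers, add, then write back.
        return [("LOAD", 0, a), ("LOAD", 1, b), ("ADD", 2, 0, 1), ("STORE", 2, d)]
    raise ValueError(f"unknown target {target!r}")


for target in ("risc", "cisc"):
    print(target, compile_add("c = a + b", target))
```

The source statement never changes; only the “building plans” the compiler emits differ per target.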

We need less power!

Let’s circle back to Arm again. If you’ve been connecting the dots, you can probably guess what makes Arm so appealing to mobile system designers. The key here is efficiency.

In an embedded or mobile scenario, power efficiency is far more important than performance. Almost every time, a system designer will accept a small performance hit if it means saving power. Until battery technology improves, heat and power consumption will remain the main limiting factors when designing a mobile product. That’s why we don’t see large desktop-scale processors in our mobile phones. Sure, they are much faster than mobile chips, but your phone would get too hot to hold and the battery would last just a few minutes. While a high-end desktop x86 CPU may draw 200 watts at load, a mobile processor will typically max out at around 2 to 3 watts.
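A quick back-of-the-envelope calculation shows why that wattage gap matters. The sketch below assumes a hypothetical 15 Wh phone battery, in the ballpark of a modern smartphone, and ignores the display, radios, and every other component, so it only compares the two processor power figures quoted above.

```python
def runtime_hours(battery_wh: float, load_watts: float) -> float:
    """Hours a battery lasts at a constant load (energy divided by power)."""
    return battery_wh / load_watts


BATTERY_WH = 15.0  # assumed phone battery capacity, not a measured figure

desktop = runtime_hours(BATTERY_WH, 200.0)  # high-end desktop CPU at load
mobile = runtime_hours(BATTERY_WH, 3.0)     # upper end of a mobile chip's budget

print(f"desktop-class CPU: {desktop * 60:.1f} minutes")  # 4.5 minutes
print(f"mobile CPU:        {mobile:.1f} hours")          # 5.0 hours
```

Even this crude estimate lands in the “few minutes” range for a desktop-class chip, before heat is even considered.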

You could certainly make a lower-powered x86 CPU, but the CISC paradigm is best suited to more powerful chips. That’s why we don’t often see Arm chips in desktops or x86 chips in phones; they’re just not designed for that. Why is Arm able to achieve such good energy efficiency? It all goes back to its RISC design and the lower complexity of the architecture. Since an Arm chip doesn’t need to process as many types of instructions, its internal architecture can be much simpler, and less circuitry is spent on overhead such as decoding complex instructions.

These all translate directly into energy savings. A simpler design means more transistors can directly contribute to performance rather than being used to manage other portions of the architecture. A given Arm chip can’t process as many types of instructions, or process them as quickly, as a comparable x86 chip, but it makes up for that in efficiency.

Say hello to my little friend

Another key feature that Arm has brought to the table is the big.LITTLE heterogeneous computing architecture. This type of design pairs two different kinds of CPU cores on the same chip.

One is a very low-power core, while the other is a much more powerful core. The system monitors utilization to determine which core to activate. In other scenarios, software can tell the chip to bring up the more powerful core if it knows a computationally intensive task is coming.

If the device is idle or just running a basic computation, the lower-power (LITTLE) core will turn on and the more powerful (big) core will turn off. Arm has stated that this can deliver up to 75% savings in power. Although a traditional desktop CPU certainly lowers its power consumption during periods of lighter load, there are certain parts that never shut off. The ability to completely turn off the big core gives Arm designs a clear efficiency advantage at light loads.
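The switching policy described above can be sketched as a simple threshold rule. In the Python below, the core power figures, the LITTLE core’s relative capacity, and the sample workload are all hypothetical values chosen for illustration; real operating-system schedulers use far more elaborate policies, and the numbers are not meant to reproduce Arm’s 75% figure.

```python
from dataclasses import dataclass


@dataclass
class Core:
    name: str
    capacity: float  # throughput relative to the big core (big = 1.0)
    watts: float     # assumed power draw while the core is switched on


# Hypothetical figures for illustration only, not measured from any real chip.
BIG = Core("big", capacity=1.0, watts=2.0)
LITTLE = Core("LITTLE", capacity=0.4, watts=0.3)


def pick_core(demand: float) -> Core:
    """Use the LITTLE core whenever it can keep up; otherwise wake the big core."""
    return LITTLE if demand <= LITTLE.capacity else BIG


def energy_joules(demands: list[float], seconds_per_slice: float = 1.0,
                  heterogeneous: bool = True) -> float:
    """Energy for a sequence of per-slice demands; the unused core is fully off."""
    total = 0.0
    for demand in demands:
        core = pick_core(demand) if heterogeneous else BIG
        total += core.watts * seconds_per_slice
    return total


# A mostly idle phone with one short burst of heavy work.
workload = [0.05, 0.1, 0.1, 0.9, 0.8, 0.1, 0.05, 0.05]
baseline = energy_joules(workload, heterogeneous=False)
mixed = energy_joules(workload)
print(f"big only: {baseline:.1f} J, big.LITTLE: {mixed:.1f} J, "
      f"saving {1 - mixed / baseline:.0%}")
```

The saving comes entirely from the idle-heavy slices: the sketch assumes the big core draws nothing while it is switched off, which is exactly the property the paragraph above credits to Arm’s design.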

Processor design is always a series of trade-offs at every step of the process. Arm went all-in on the RISC architecture and it has paid off handsomely. Back in 2010, they had a 95% market share on mobile phone processors. This has gone down slightly as other companies have tried to enter the market, but there’s still nobody even close.

Licensing and widespread use

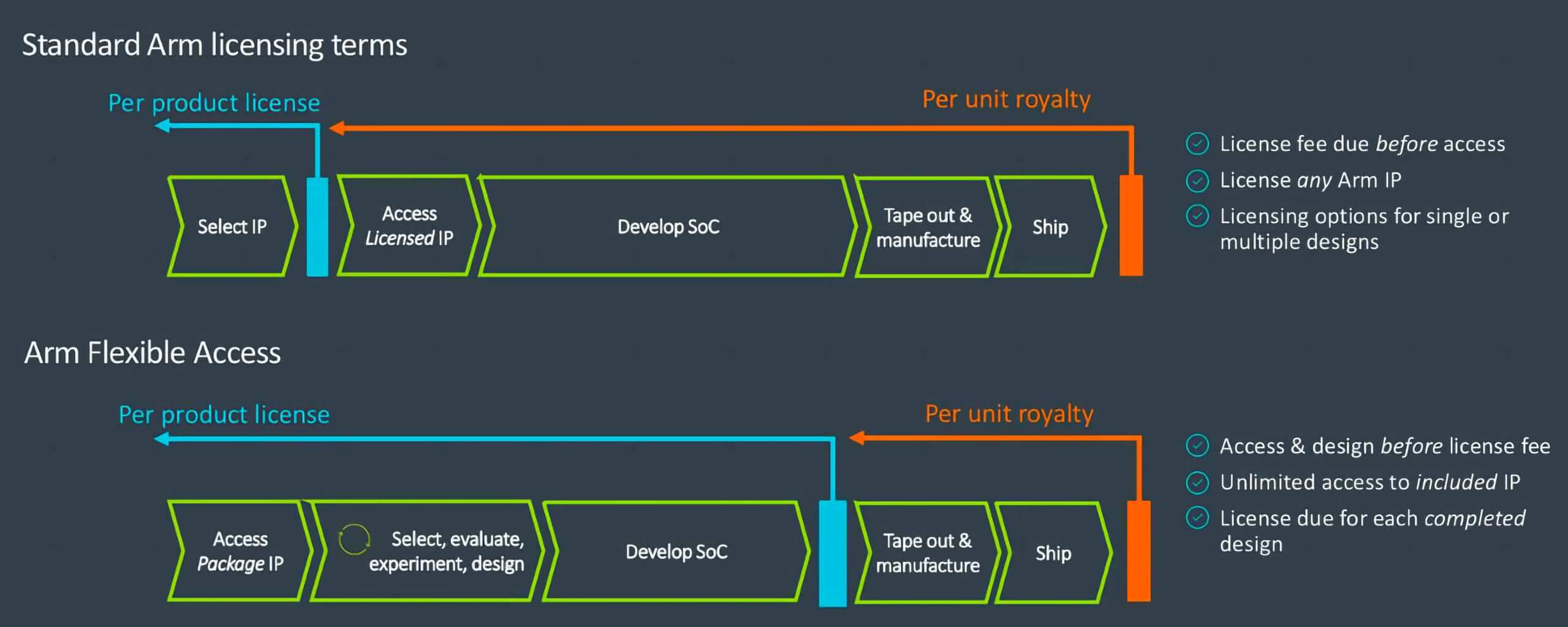

Arm’s licensing approach to business is another reason for their market dominance. Physically building chips is immensely difficult and expensive, so Arm doesn’t do that. This allows their offerings to be more flexible and customizable.

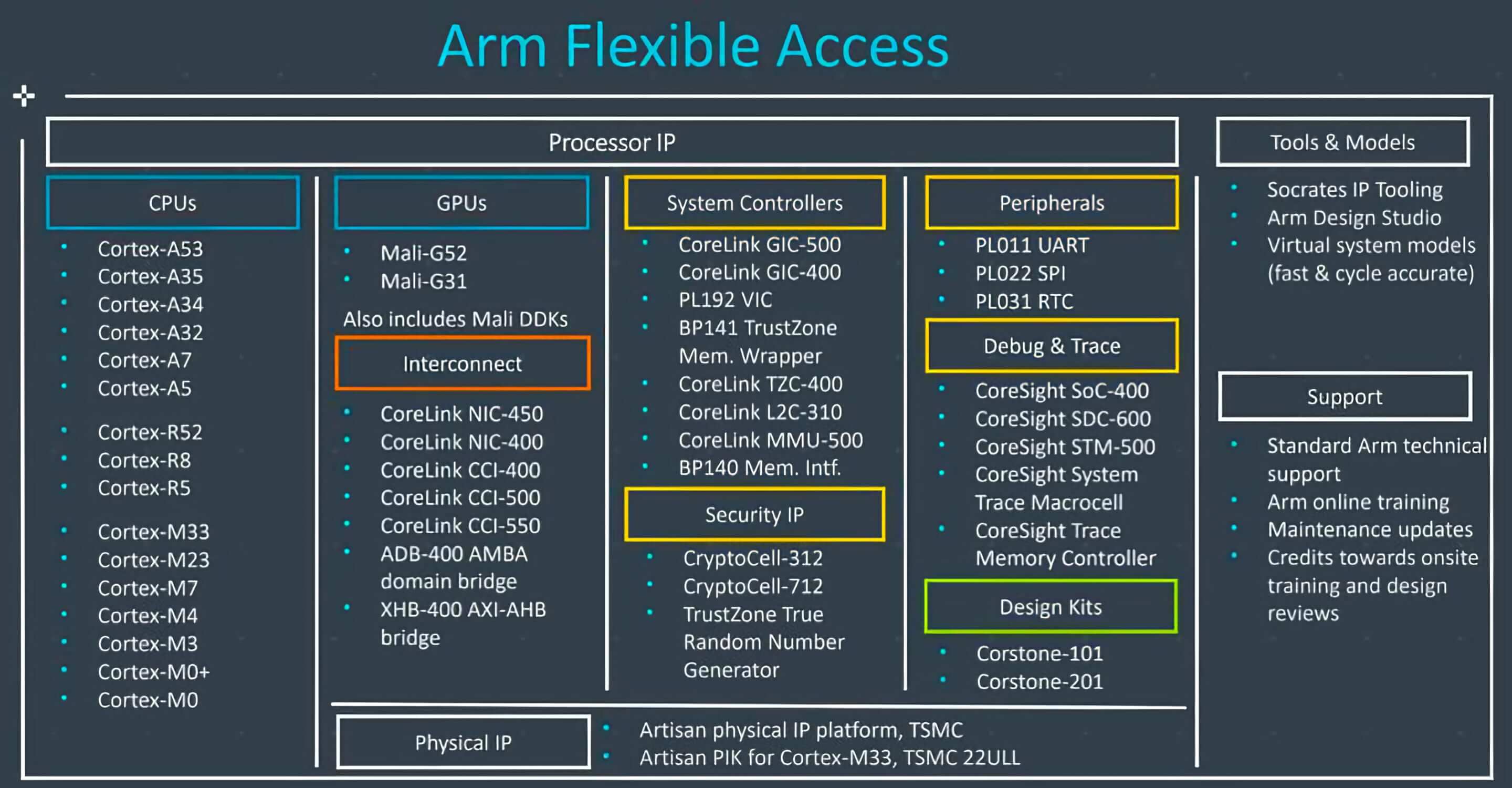

Depending on the use case, a licensee can pick and choose features that they want and then have Arm select the best type of chip for them. Customers may also design their own proprietary chips and implement only some of Arm’s instruction sets. The list of tech companies using Arm’s architecture is too large to fit here, but to name a few: Apple, Nvidia, Samsung, AMD, Broadcom, Fujitsu, Amazon, Huawei and Qualcomm all use Arm technology in some capacity.

Beyond smartphones (which are really handheld computers), Arm is making its way into PCs, with Microsoft pushing the architecture for its Surface and other lightweight devices. We reviewed the first generation of Windows 10 on Arm devices a couple of years ago, and while that effort didn’t get the OS all the way to the finish line, we’ve since seen newer and better initiatives like the Surface Pro X.

Apple has also brought macOS to Arm, and its first generation of M1-powered devices shows that laptops can work nearly as efficiently as phones do. When applications are natively compiled for Arm, these Macs show not only efficiency but performance prowess.

Even in the datacenter, Arm’s promise was for years primarily about power savings, a critical factor when you’re talking about thousands and thousands of servers. Recently, however, Arm has seen more adoption in large-scale computing installations that aim to offer both power and performance improvements over existing solutions from Intel and AMD. Frankly, not many people expected that to happen so soon.

Arm has also built up a large ecosystem of supplemental intellectual property (IP) designed to work alongside its architectures. This includes things like accelerators, encoders/decoders, and media processors that customers can license for use in their products.

Arm is also the architecture of choice for the vast majority of IoT devices.

Amazon’s Echo and the Google Home Mini both run on Arm Cortex processors. Arm chips have become the de facto standard, and you need a really good reason not to use an Arm processor when designing mobile electronics.

You fit all that in one chip?

In addition to their central ISA business unit, Arm has aggressively expanded into the system on a chip (SoC) space. The mobile computing market has shifted towards a more integrated design approach as space and power requirements have gotten tougher. A CPU and an SoC have many similarities, but SoCs are the next generation of computing.

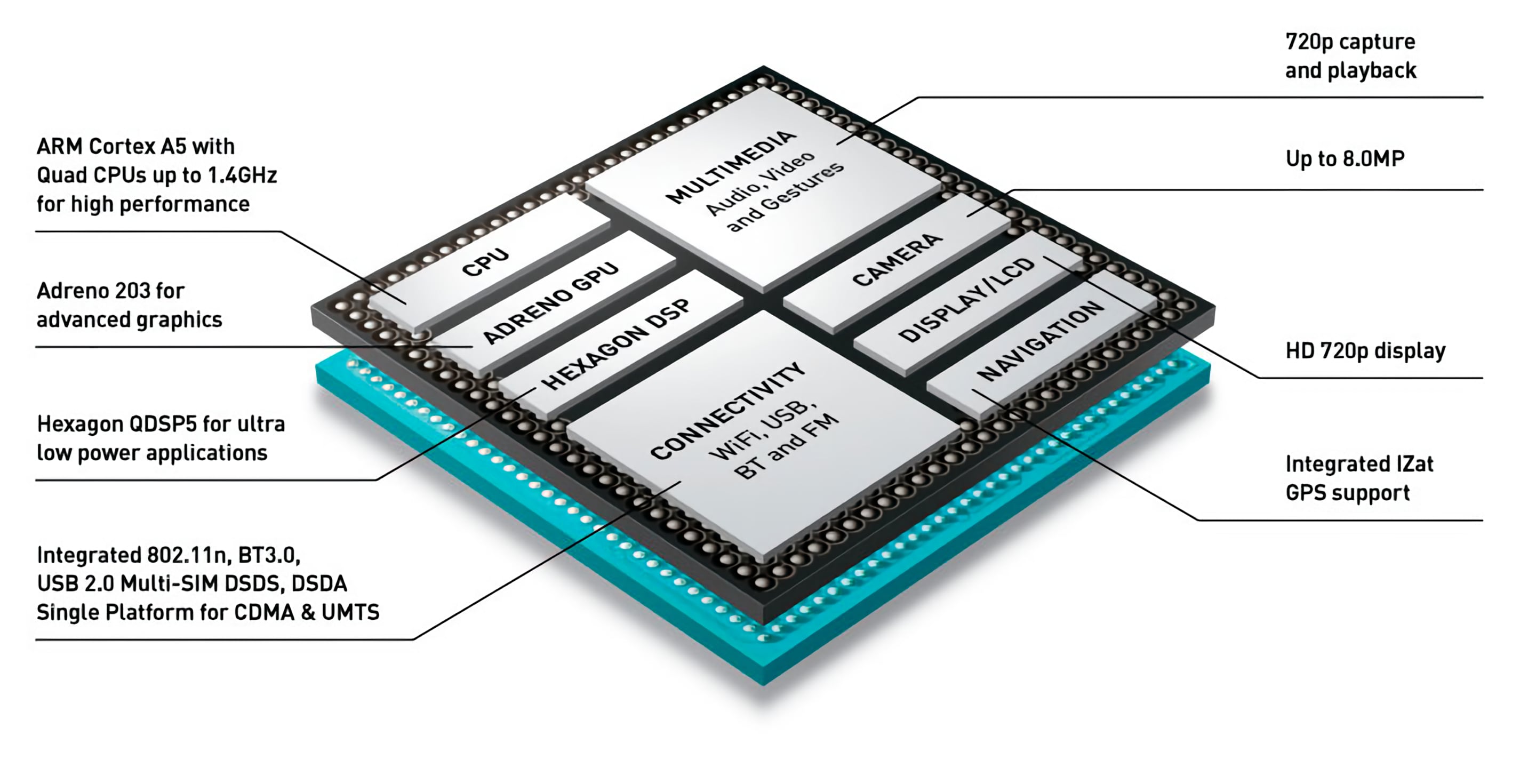

A system-on-a-chip does exactly what it sounds like. It combines many distinct components onto one chip to increase efficiency. Imagine shrinking an entire desktop motherboard down into a single chip and that’s what an SoC is. It usually has a CPU, graphics processor, memory, peripheral controllers, power management, networking, and some accelerators.

Before this design was adopted, systems would need an individual chip for each of these functions. Communication between chips can be 10 to 100x slower and use 10 to 100x more power than an internal connection on the same die. This is why the mobile market has embraced this concept so strongly.

Representation of the many features in an Arm-based Qualcomm SoC

SoCs are not suitable for every type of system. Traditionally, we haven’t seen desktops or mainstream laptops with SoCs because there’s a limit to how much you can cram into a single chip. Fitting the functionality of an entire discrete GPU, an adequate amount of RAM, and all the required interconnects onto a single chip is not something chipmakers were capable of doing until recently. Apple’s M1 is seen as the first mainstream design of that paradigm shift. Just like the RISC paradigm, SoCs have been great for low-power designs but less so for high-performance ones, though that could change soon.

By now, you’ve hopefully gained an understanding of why Arm became so dominant in mobile computing. Their licensing model lets them supply designs to a wide range of chip manufacturers, and by going with the RISC model, they prioritize power efficiency over raw performance. This is the name of the game in mobile computing, and Arm are grandmasters. But there’s still more to come, and Arm’s influence extends to just about every person on the planet.

Keep Reading. Hardware at TechSpot
