Autonomous vehicles hold the promise of making driving safer for everyone by removing the most common cause of traffic accidents: human drivers. However, such vehicles may pose a completely different type of risk to drivers, passengers and even pedestrians.

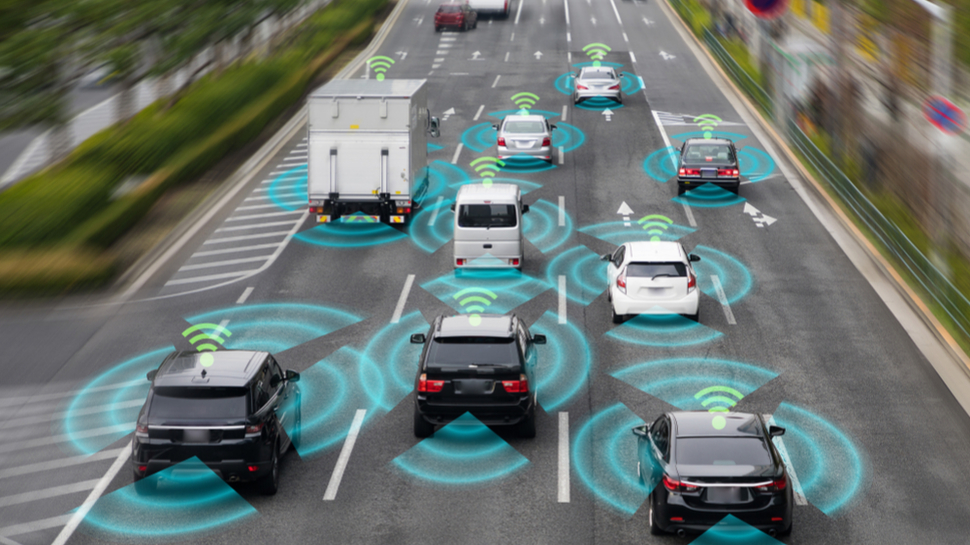

To drive themselves, autonomous vehicles use AI systems that employ machine learning techniques to collect, analyze and transfer data, making the decisions on the road that human drivers would normally make. However, just like all IT systems, these systems are vulnerable to attacks that could compromise how self-driving vehicles function.

The European Union Agency for Cybersecurity (ENISA) and the Joint Research Centre (JRC) have published a new report titled “Cybersecurity Challenges in the Uptake of Artificial Intelligence in Autonomous Driving” that sheds light on the risks posed by using AI in autonomous vehicles and provides some recommendations on how to mitigate them.

JRC Director-General Stephen Quest provided further insight into the report and its findings in a press release, saying:

“It is important that European regulations ensure that the benefits of autonomous driving will not be counterbalanced by safety risks. To support decision-making at EU level, our report aims to increase the understanding of the AI techniques used for autonomous driving as well as the cybersecurity risks connected to them, so that measures can be taken to ensure AI security in autonomous driving.”

Securing AI in autonomous vehicles

The AI systems used by autonomous vehicles have to work constantly to recognize traffic signs, road markings and other vehicles while estimating their speed and planning their path.

In addition to unintentional threats such as sudden malfunctions, these systems are also vulnerable to intentional attacks designed to interfere with how they work. For instance, adding paint to a road or stickers to a stop sign could prevent an autonomous vehicle’s AI system from working properly, which could lead it to misclassify objects in a way that makes the vehicle behave dangerously.
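To make the idea of such an attack more concrete, here is a minimal sketch in Python. It is not taken from the ENISA and JRC report: the tiny linear classifier, its weights, the feature values and the `epsilon` limit are all made-up assumptions, and real perception systems are far more complex. The sketch only shows how a small, bounded change to the input can flip a classifier's decision.

```python
# Toy illustration only: a hypothetical linear "sign classifier".
# All weights, features and epsilon are invented for this example.

def score(weights, bias, features):
    """Linear score: a positive value means 'stop sign'."""
    return sum(w * x for w, x in zip(weights, features)) + bias

def classify(weights, bias, features):
    return "stop sign" if score(weights, bias, features) > 0 else "other sign"

def sign(value):
    """Return 1, 0 or -1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def perturb(weights, features, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers
    the score. For a linear model that direction is the sign of each weight."""
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]

WEIGHTS = [0.9, -0.4, 0.7, 0.3]   # hypothetical learned weights
BIAS = -0.5                       # hypothetical bias term
clean = [0.8, 0.2, 0.6, 0.5]      # hypothetical features of a clean stop sign

adversarial = perturb(WEIGHTS, clean, epsilon=0.4)

print(classify(WEIGHTS, BIAS, clean))        # clean input
print(classify(WEIGHTS, BIAS, adversarial))  # perturbed input
```

In this toy setup the clean input is labelled a stop sign, while the perturbed input, with no feature changed by more than `epsilon`, is labelled as something else. A sticker on a real sign plays a loosely similar role to that bounded change.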

To improve AI security in autonomous vehicles, the report recommends that regular security assessments of AI components be conducted throughout their lifecycle. The report also suggests that risk assessments be undertaken to identify potential AI risks.

These security issues will need to be solved before autonomous vehicles can become a common sight on roads in the EU and all over the world.

Via ENISA
