
Facebook’s Oversight Board will soon make its most consequential decision yet: whether or not Donald Trump’s “indefinite suspension” from Facebook and Instagram should be lifted.

The ruling will be the biggest test so far for the Oversight Board, Facebook’s most ambitious attempt yet to prove that it can regulate itself. The Trump decision will also likely shape public perception of the organization, which has so far issued fewer than a dozen decisions.

But the Oversight Board, which has been widely described as “Facebook’s Supreme Court,” was set up to deal with more than just Trump. The Facebook-funded organization is meant to help the social network navigate its most complicated and controversial decisions around the world. It could also end up influencing Facebook’s broader policies — if the company allows it.

Facebook’s ‘Supreme Court’

The board itself has been functional for less than a year, though the idea behind it is older. A Harvard professor and longtime friend of Facebook COO Sheryl Sandberg reportedly proposed that Facebook create a kind of “Supreme Court” for its most controversial content moderation decisions. That idea formed the basis for what we now know as the Oversight Board.

According to Facebook, the Oversight Board is meant to be fully independent. But the social media company provided the initial $130 million in funding, meant to last six years, and helped choose the board’s members. Throughout the process, Mark Zuckerberg was “heavily involved in the board’s creation,” The New Yorker reported in its in-depth look into the origins and early days of the Oversight Board.

On the other hand, the Oversight Board has gone out of its way to emphasize its independence. Its public policy manager, Rachel Wolbers, even recently suggested that the board could one day weigh content moderation decisions for other platforms. “We hope that we’re going to do such a great job that other companies might want our help,” she said during an appearance at SXSW.

For now, the board has 19 members from all over the world (there were originally 20, but one left in February to join the Justice Department). Eventually, it will expand to 40, though its governing documents allow for the board to “increase or decrease in size as appropriate.”

Its first members include Alan Rusbridger, former editor-in-chief of The Guardian; Helle Thorning-Schmidt, the former prime minister of Denmark; and John Samples, the vice-president of the libertarian Cato Institute. All members “have experience in, or experience advocating for, human rights,” according to the board. And all members are paid for their part-time work with the organization.

However, unlike the actual Supreme Court, the Oversight Board comes with term limits. Members are limited to three three-year terms.

How the Oversight Board works

Facebook takes down thousands of posts every day, but only a tiny fraction of those take-downs will ever become official Oversight Board cases. For those that do, there are a few ways a case may make its way up to the board.

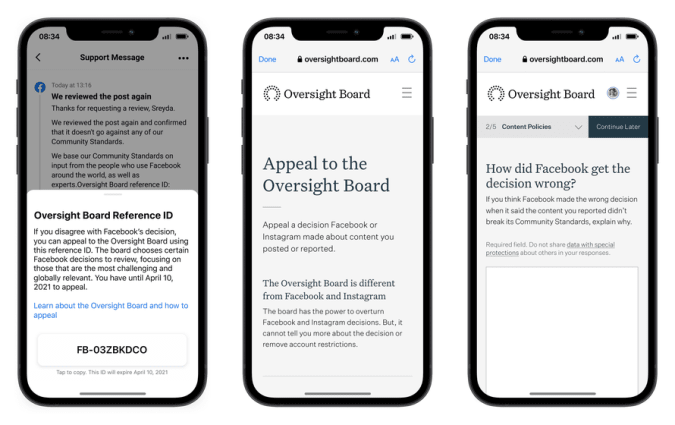

When Facebook takes down a post for breaking its rules, users have the option to appeal the decision. Sometimes, those appeals result in Facebook reversing its decision. But in cases when Facebook chooses not to reinstate a piece of content, users have the option to appeal to the Oversight Board as a last resort. Again, making an appeal is no guarantee that the board will take up the case. Of the more than 300,000 appeals the board has received, only 11 cases have been selected.

This week, Facebook announced that it would expand the types of content the board could weigh in on by enabling users to make another type of appeal. Instead of contesting content Facebook had removed, users will now have the ability to appeal content the company has chosen to leave up.

Under that procedure, users are first required to go through Facebook’s reporting process. If the company ultimately decides to leave the reported post up, it will alert the user who reported it and provide a reference ID that allows them to appeal to the Oversight Board. One notable difference compared with the appeals process for take-downs is that multiple users are able to appeal the same post in these “leave up” cases.

Finally, Facebook policy officials also have the ability to escalate “significant and difficult” decisions directly to the board without waiting for any kind of appeal process to play out. Trump’s suspension was one such case. The company has also referred a case involving COVID-19 health misinformation to the board, which ultimately overturned the decision to remove a post criticizing the French government over COVID-19 treatments.

Once the board makes a decision, Facebook is required to implement it. The company has gone out of its way to point out that no one is able to overrule the Oversight Board. At the same time, Facebook is only bound to implement the board’s decisions on the specific cases it rules on, though the company says it will make an effort to apply the decision to “identical content with parallel context.”

Still, the board can exert some influence on the social network’s underlying policies, at least in theory. Along with each take-down or leave-up decision, the board also weighs in on the company’s rules and makes its own recommendations. Facebook is required to respond to these recommendations but, crucially, isn’t required to follow them.

So while the board can wield considerable power in specific cases, such as the upcoming Trump decision, Facebook still has the final say when it comes to its own policies. This has led to criticism from advocacy groups and other organizations who say a board charged with “oversight” should also be able to influence other big issues, like advertising policies and Facebook’s algorithms.

What it’s done so far

The board has ruled on only seven cases so far, and it overturned Facebook’s initial decisions in five of those. (There has been speculation that the board may be inclined to reinstate Trump’s account, but it has so far given no indication of how it will rule.)

Tellingly, the board has called some of Facebook’s content policies “inappropriately vague” or not “sufficiently clear to users.” And many of its initial recommendations to Facebook have encouraged the company to communicate more clearly with users. Likewise, the board has shown some skepticism of Facebook’s use of automation in moderation decisions, and has said users should know when a post is removed as a result of automated detection tools.

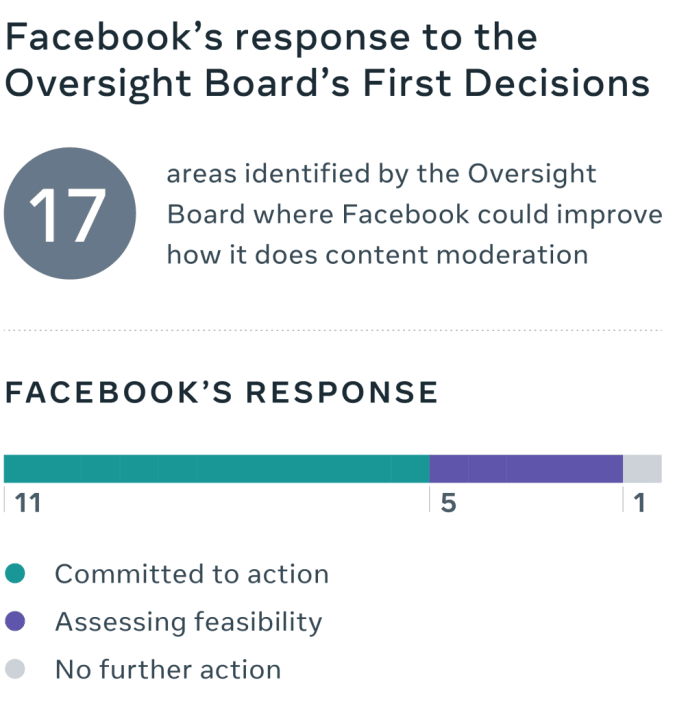

Just how much sway the board will have over broader policies, however, is less clear. Facebook recently issued its response to the Oversight Board’s initial set of policy recommendations, and its commitments were somewhat tenuous. In a couple of areas, the company did make some noteworthy changes. For example, it agreed to clarify the nudity policy for Instagram, and it opted to better explain its policies around vaccine misinformation.

In other areas, Facebook’s responses were more cagey. The company made several vague commitments to increase “transparency” but offered few specifics. In response to other recommendations, the company simply said that it was “assessing feasibility” of the changes.

When it comes to the Trump decision, the board has suggested that it will also weigh in on relevant Facebook policies. But again, there’s no requirement for the social network to implement any changes.

What we do know is that the board is already treating the Trump decision differently than other cases. With the original 90-day deadline just days away, the board announced that it was extending its deadline, citing the more than 9,000 public comments it had received. The decision is now expected “in the coming weeks.”
